Negative Samples

What are negative samples?

Negative samples are data that do not contain any cases of the object or information the model seeks. Properly incorporating these samples into training is often an essential strategy for reducing false positives. We frequently want to include these data in training, validation, and testing datasets.

The use of negative samples typically applies to detection and segmentation problems in computer vision (CV) and to named entity recognition (NER) problems in natural language processing (NLP).

Negative samples do not typically apply to classification problems (whether in the CV or NLP domains) because, in a classification formulation, a label is always assigned. Most classification models and techniques output only labels that are elements in a discrete set of labels known ahead of time. Other classification models and methods have an implicit or explicit "other" category that samples can be assigned when other labels are not relevant. The features discussed here focus on using negative samples in non-classification problems.

Why do you use negative samples?

The primary purpose of including negative samples in training data is to reduce the false positives your model produces (increasing its precision) when making inferences on operational data. Models often produce unexpectedly and unacceptably high numbers of false positives without adequate representation of negative samples.

Without adequate representation of negative samples, we will often underestimate expected false positives and overestimate expected precision.

The fundamental cause is that negative samples must be included for many detection and segmentation problems to ensure that the distribution of the training, validation, and test datasets matches that of operational data. If the distributions do not match, the model will not perform well. It is tempting to think that non-detection regions in the positive sample chips provide enough diversity in the "background" data for the model to perform well. However, this is incorrect.

Consider detecting cars in satellite imagery. Almost always, satellite images are huge and will be "chipped" (divided into small regions with possible overlap) before being sent to the model for either training or inference. If our training data only contains chips with cars present, that biases our selection of chips and thus biases the training distribution. We will probably never include any training samples that are entirely oceans or lakes, obscured by clouds, obscured by shadow, or degraded by image artifacts. And we will likely include few, if any, chips that are entirely forests or mountains. As a result, our model will not see any training data that consists of those scenes. In contrast, during operation, the model will likely see all the chips from many large satellite images, and it will most likely have false positive problems in regions that are unlike the data it was trained on. Ultimately, without negative samples, the distribution of our operational data is out of sample with our training, validation, and testing data distributions.

Using negative samples may also provide secondary benefits to internal model representations, generalization, stability of the training process, recall, and other goals.

How do you use them?

You will include data from several broad categories when building your training, validation, and testing datasets. These categories include positive samples, negative samples, perhaps samples intentionally drawn from specific background categories (e.g., land, ocean, city, woods, mountains, cloud, etc., in the above example), and perhaps known "confuser" categories (e.g., very similar non-target objects that the model should not mark as detections, or chips that are known to generate false positives).

Annotating Negative Samples

When creating an annotation task, Chariot includes a "None of [...]" label by default to identify samples with no positive annotations. The "..." will be populated as the list of labels in the annotation task. This is the "context" for which the negative label is applied.

For example, if we are annotating for cars and trucks but not boats or buildings, then our context is cars and trucks, and the negative label will be "None of [cars, trucks]" so that when we annotate a sample as negative, we know that it is only negative in the sense of no cars or trucks. There may be boats, buildings, and other things in the sample. In a subsequent session, we may annotate an image with such labels. Optionally, this label can be excluded when creating the annotation task.

Thus, the annotation of "None" is by context, and the purpose is to enable the inclusion of known negative samples in training runs.

Configuring a Training Run

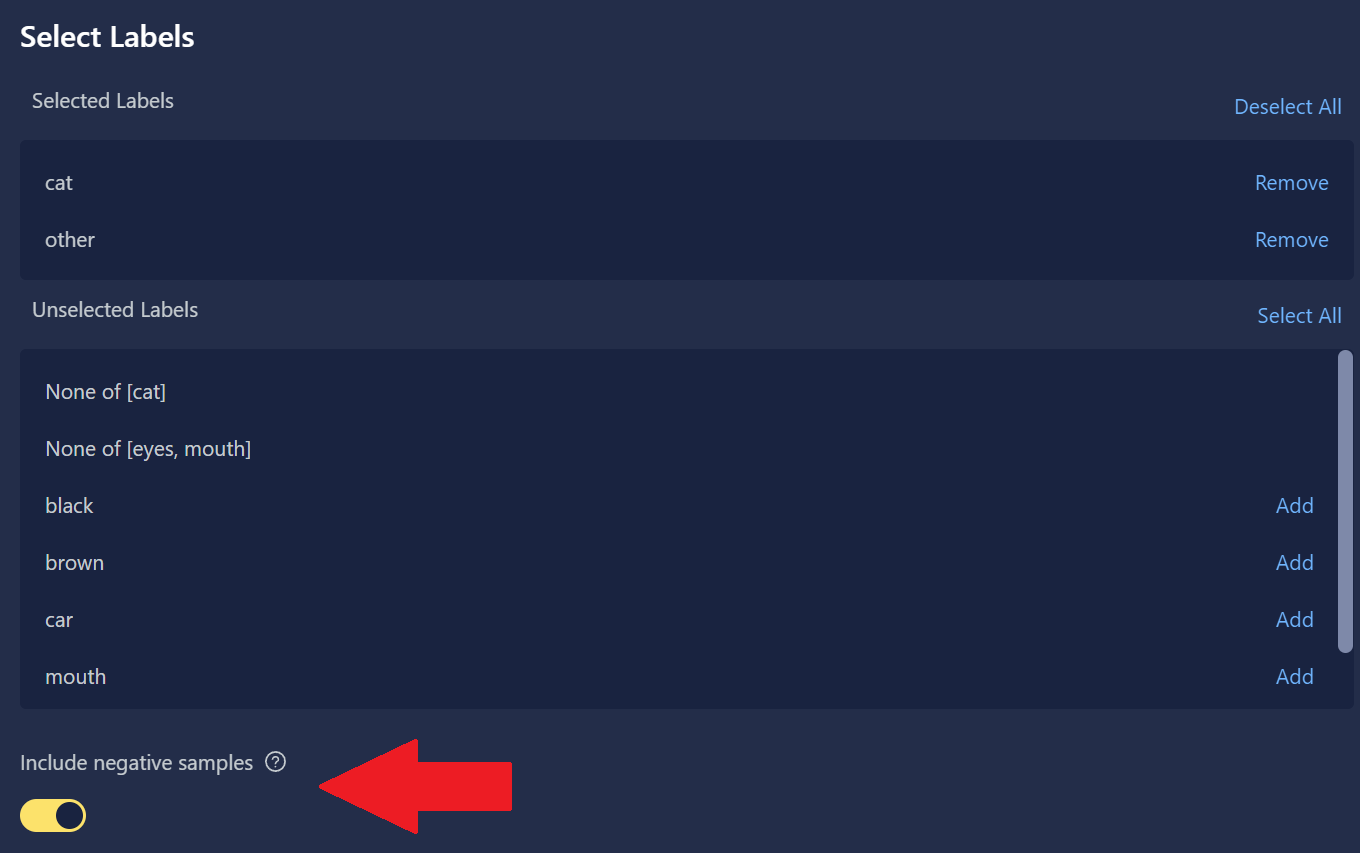

When configuring your training run(s), Chariot will list all labels within the selected training and validation datasets. In general, not all labels will be assigned targets of the model, and any samples annotated explicitly as "None of ..." are considered negative data samples. To include all unselected labels as negative samples in the training run, for both training and validation, make sure the toggle shown below is enabled (yellow). Turning the toggle off will exclude all samples that do not have the selected labels within them, meaning that we would not include negative samples at all in the training run. Typically—but not always—negative samples should be retained; additional guidance on doing so is provided below.

How do you do use negative samples effectively?

It is usually not best to include too many negative samples in the training data when training a new model from scratch (not refinement). Doing so can result in the run failing to progress, and metrics on the validation set will remain essentially zero. You will usually achieve better results by starting with either negative samples filtered out or relatively few negative samples in the training data. After the model performance has progressed on this data, you can add negative samples into one or more stages of refinement runs, perhaps with a lower training rate. Often, negative samples can be added all at once. If this is ineffective, those samples can be added more gradually. It is not necessary to wait to add negative samples to the validation data; using the same validation data throughout all training runs better quantifies performance progress.

It is usually better to add only some available negative samples to the training data. When detecting cars, boats, or other rarer vehicles in satellite imagery, for example, there may be hundreds of negative samples for every positive. Training data with a more balanced class distribution often yields better-performing models. Consider starting with a negative: positive ratio of 0:1 or 0.1:1, and then, in later runs, progress to 1:1, 5:1, or maybe even 10:1. It is unlikely that ratios past 20:1 will be advantageous.

One can argue for adding all available negative samples (that don't overlap with training or testing sets) to the validation dataset for the most accurate estimate of the false positive rate (precision). However, this slows training considerably, so choose which option is more valuable.

Additional notes

-

Chariot does not have negative sample settings for classification training runs.

-

If filtering out negative settings reduces a dataset's size to zero, Chariot may generate an error in the training run. Fortunately, since all samples will be filtered out, removing that dataset from the run is an easy workaround.

-

Chariot training data logs record the number of samples in each dataset before and after filtering for (or retaining) negative samples.

-

Configuring negative sample selections in the user interface causes Chariot to modify the training run configuration. You can use Advanced Mode to compare the configuration JSON files of training runs that are identical except for negative sample settings