Exporting a Model

Just as Chariot can ingest models from the outside world, it can also export models (for example, if you want to deploy a Chariot-trained model on an edge device).

Export Options

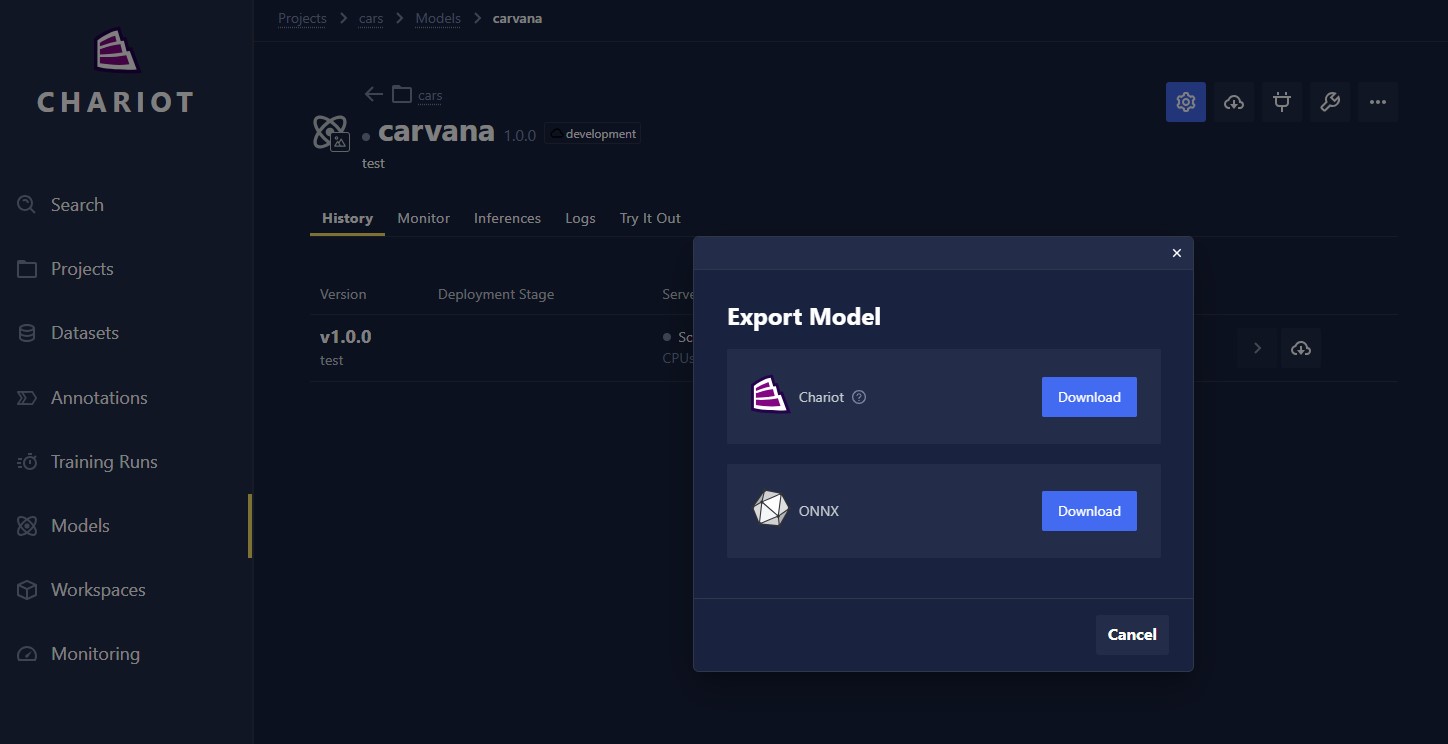

Select the Download icon, found either on a model's Model History tab or at the top of the Model page. A list of supported export formats appears. Click the Download button for the export format you want, and a .tar.gz file will download.

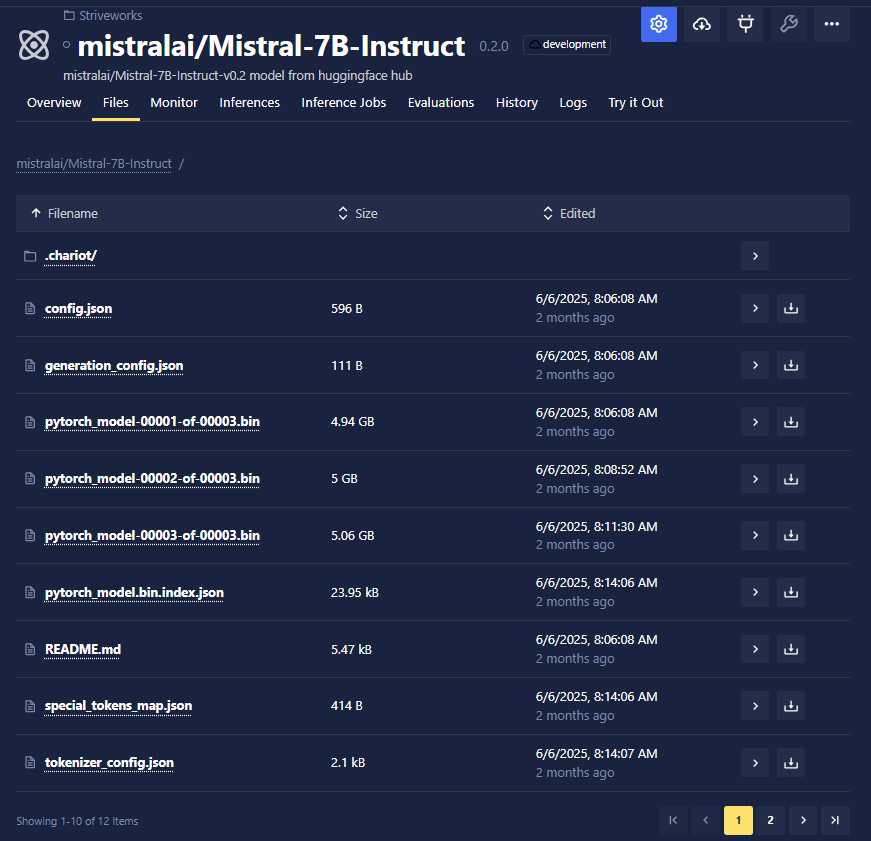

Additionally, you can use the Files tab to view all files that constitute the model object and download individual files.

Resumable Downloads

Model downloads can be resumed through either the browser or the API. In the browser, open the browser's download manager and resume the interrupted model download from there. Through the API, include the HTTP Range request header in the resume request so that the server sends only the bytes you do not have yet.

For example, curl's -C - option calculates the remaining range from the size of the partially downloaded file and sets the Range header on the resumed request:

curl -X 'GET' "https://<chariot-host>/api/catalog/v1/models/<model-id>/download" -H 'accept: application/json' -H "Authorization: Bearer <chariot-token>" -o model.tar.gz

...

<download gets interrupted>

...

curl -C - -X 'GET' "https://<chariot-host>/api/catalog/v1/models/<model-id>/download" -H 'accept: application/json' -H "Authorization: Bearer <chariot-token>" -o model.tar.gz
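If you want to set the Range header yourself instead of relying on -C -, its start offset is the number of bytes already on disk. The following is a minimal bash sketch, assuming the partial file is still in place and the server honors byte ranges; the function names range_header_for and resume_download and the CHARIOT_HOST, MODEL_ID, and CHARIOT_TOKEN variables are hypothetical, not part of the Chariot API.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Chariot): resume an interrupted model
# download by sending an explicit Range header based on the bytes on disk.

# Print the Range header value for the next byte to fetch, e.g. "bytes=1024-".
range_header_for() {
  local file="$1" size=0
  if [ -f "$file" ]; then
    # wc -c counts bytes; arithmetic expansion strips the padding some wc versions add.
    size=$(( $(wc -c < "$file") ))
  fi
  printf 'bytes=%d-\n' "$size"
}

# Append the remaining bytes to the partial file.
# Assumes CHARIOT_HOST, MODEL_ID and CHARIOT_TOKEN are set by the caller.
resume_download() {
  local out="$1"
  local url="https://${CHARIOT_HOST}/api/catalog/v1/models/${MODEL_ID}/download"
  curl -fsS "$url" \
    -H 'accept: application/json' \
    -H "Authorization: Bearer ${CHARIOT_TOKEN}" \
    -H "Range: $(range_header_for "$out")" >> "$out"
}

# Usage (after an interrupted download):
#   CHARIOT_HOST=<chariot-host> MODEL_ID=<model-id> CHARIOT_TOKEN=<chariot-token> \
#     resume_download model.tar.gz
```

This sketch does not check that the server answered with 206 Partial Content; if a server ignored the Range header and returned the whole file, appending would corrupt the archive, so verify the archive afterwards.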

To verify that your download is complete, unpack the archive and check that the files and file sizes match those shown in the UI.

tar -xzvf /location/of/downloaded/model.tar.gz -C /destination/for/output/files
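You can also catch a truncated download before extracting anything: a gzip stream ends with a CRC-32 checksum and length trailer, so an incomplete file fails gzip's integrity test. A minimal bash sketch, assuming standard gzip and tar are installed; the function name verify_archive is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that a downloaded model archive is complete,
# then list its contents with sizes for comparison against the UI.
verify_archive() {
  local archive="$1"
  # gzip -t decompresses without writing output and verifies the CRC-32
  # and length trailer; a truncated download fails here.
  if ! gzip -t "$archive" 2>/dev/null; then
    echo "corrupt or incomplete: $archive" >&2
    return 1
  fi
  # -t lists members and -v adds their sizes; nothing is extracted.
  tar -tzvf "$archive"
}

# Usage:
#   verify_archive /location/of/downloaded/model.tar.gz
```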

Running an Exported Chariot Model Locally

The following steps demonstrate how to run a Chariot-native model offline. For other model types, such as scikit-learn, Hugging Face, or ONNX models, refer to the official documentation of the respective framework for guidance on running those models outside of Chariot.

- Unpack the downloaded archive into a folder. The folder will contain the model weights (as a PyTorch binary) and a configuration file with preprocessing and postprocessing information (such as image resizing, pixelwise normalization, and conversion from output integers to class label strings).

mkdir mymodel

tar -zxf model.tar.gz -C mymodel

- We recommend using a virtual environment for package isolation:

virtualenv teddyenv

source teddyenv/bin/activate

- Install our Teddy-inference wheel, which is served by this instance of Chariot. You also need FastAPI; its [all] extra also installs the Uvicorn server used to start the inference server.

pip install https://<chariot-host>/docs/static/teddy_inference-0-py3-none-any.whl

pip install "fastapi[all]==0.71.0"

- Start the inference server, setting MODEL_PATH to the folder you unpacked the model into:

MODEL_PATH=mymodel uvicorn teddy_inference.api:app --host 0.0.0.0 --port 8000

- To run an inference, navigate to http://localhost:8000 in a web browser, click Try it out, and then click Choose File to upload an image. Then click Execute and scroll down to see the response.

Alternatively, you can run the following command from the command line:

curl localhost:8000/predict -F img=@/PATH/TO/IMAGE/FILE
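When scripting the steps above (for example, in CI), you may want to wait until the server accepts connections and then score a whole folder of images. A minimal bash sketch, assuming the server from the previous steps listens on port 8000 and exposes the /predict endpoint with the img form field shown above; the function names wait_for_server and predict_dir, and the .jpg/.jpeg/.png filter, are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until the server answers or we give up.
# Any HTTP response counts as "up"; without -f, curl fails only if it cannot connect.
wait_for_server() {
  local url="$1" tries="${2:-30}" i
  for ((i = 0; i < tries; i++)); do
    if curl -sS -o /dev/null --max-time 2 "$url" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  echo "server at $url did not respond after $tries attempts" >&2
  return 1
}

# Hypothetical helper: POST every image in a directory to the inference
# server, one request per file, using the "img" form field.
# Returns 1 if any request fails (curl -f also fails on HTTP 4xx/5xx).
predict_dir() {
  local dir="$1" url="${2:-http://localhost:8000/predict}" img failed=0
  for img in "$dir"/*.jpg "$dir"/*.jpeg "$dir"/*.png; do
    # Skip unmatched glob patterns, which bash leaves as literal strings.
    [ -f "$img" ] || continue
    echo "== $img"
    if ! curl -fsS "$url" -F "img=@${img}"; then
      echo "request failed for $img" >&2
      failed=1
    fi
    echo
  done
  return "$failed"
}

# Usage: start the server in the background, wait for it, then score a folder.
#   MODEL_PATH=mymodel uvicorn teddy_inference.api:app --host 0.0.0.0 --port 8000 &
#   wait_for_server http://localhost:8000/ && predict_dir /PATH/TO/IMAGE/DIR
```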