Enabling Inference Store

Enabling storage is simple, and configuration options let you control which inferences are stored, when they are stored, and how long they are kept.

Enabling Storage


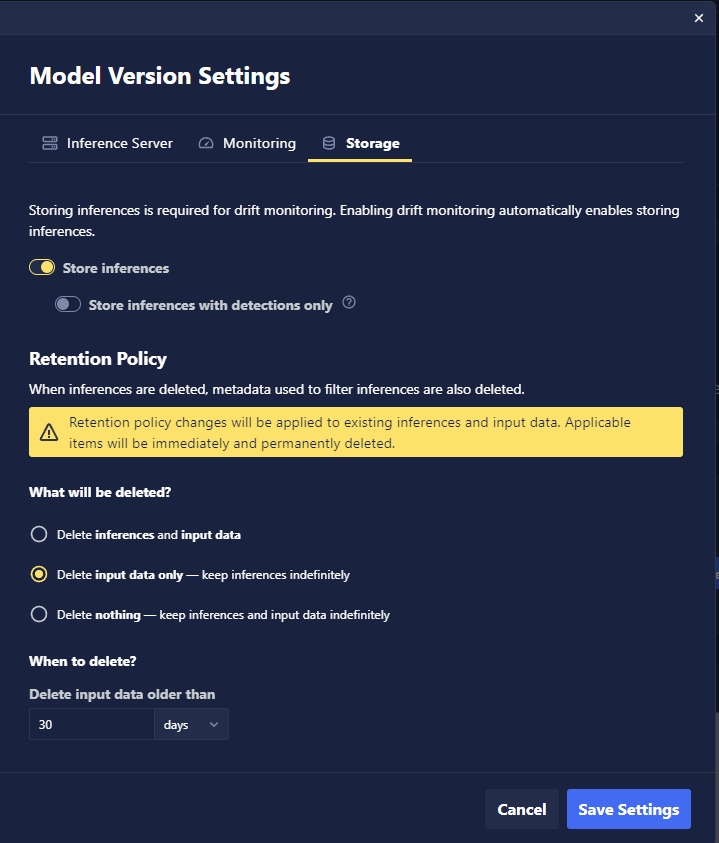

In the UI, you can enable inference storage with a single click: navigate to the model you want to store inferences for, open the Storage tab in Model Version Settings, and turn on inference storage. You can also specify how long inference and input data are retained; see Retention Policies for details.

With the SDK, first select your model and register it for inference storage:

from chariot.models import Model
from chariot.inference_store import models, register

# Look up the model whose inferences should be stored
model = Model(project_id="<your_project_id>", id="<your_model_id>")

# Register the model with the inference store
register.register_model_for_inference_storage(
    request_body=models.NewRegisterModelRequest(
        model_id=model.id, project_id=model.project_id, task_type=model.task
    )
)

Next, update the model's Inference Server settings to enable inference storage:

model.set_inference_server_settings({"enable_inference_storage": True})

Now you can begin sending inferences, and they will be stored.

Retention Policies

Retention policies manage the storage footprint of individual models. A retention policy sets the maximum length of time to keep data and specifies whether to clean up the inference input data (i.e., the image the inference was run on), the inference output (i.e., the prediction), or both.


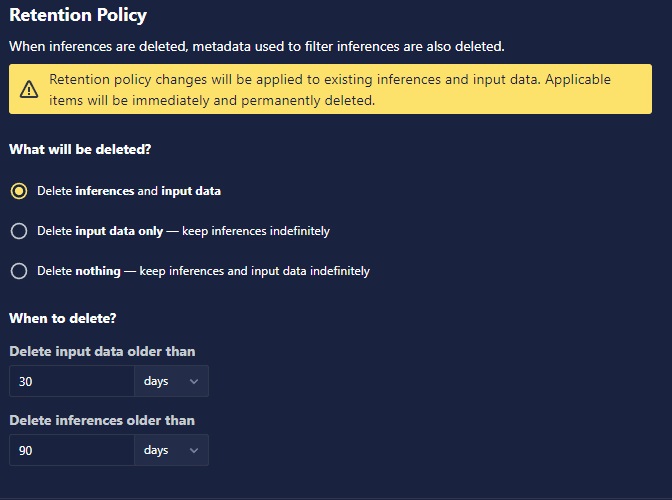

In the UI, select whether you want to:

- Delete both inference and input data after a specified time period. The retention period of inference data must be equal to or longer than the input retention period.

- Delete only input data after a specified time period and keep inferences indefinitely.

- Keep both inference and input data indefinitely.
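The three options and the ordering rule above can be expressed as a small validation check. This is a minimal sketch with hypothetical names (`RetentionChoice`, `validate_choice`) that are not part of the Chariot SDK; it assumes retention periods are given in hours and uses `None` to mean "keep indefinitely".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RetentionChoice:
    """Hypothetical model of the UI options; None means 'keep indefinitely'."""
    inference_hours: Optional[int]  # how long to keep inference output
    input_hours: Optional[int]      # how long to keep input data


def validate_choice(choice: RetentionChoice) -> None:
    """Raise ValueError if the choice is not one of the three UI options."""
    inf, inp = choice.inference_hours, choice.input_hours
    for value in (inf, inp):
        if value is not None and value < 0:
            raise ValueError("retention periods must be non-negative")
    if inf is None:
        # Keeping inferences indefinitely is allowed whether or not inputs expire.
        return
    if inp is None:
        # Deleting inferences while keeping inputs forever is not an offered option.
        raise ValueError("inputs cannot outlive inferences")
    if inf < inp:
        # Inference retention must be equal to or longer than input retention.
        raise ValueError("inference retention must be >= input retention")
```

For example, `RetentionChoice(72, 24)` (delete inputs after a day, inferences after three days) passes, while `RetentionChoice(24, 72)` is rejected.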

Each model has only one retention policy. When the policy changes, the new settings apply immediately to both past and future inputs and inferences.
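To illustrate how a changed policy can affect records that were already stored, here is a minimal sketch that checks one record's age against the input and inference retention periods. The function `expired_parts` is hypothetical, not part of the Chariot SDK; it assumes a record's age is compared against the current policy (in hours) whenever cleanup runs, that `None` means "keep indefinitely", and that a period of 0 makes a part eligible immediately.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional, Set


def expired_parts(created_at: datetime, now: datetime,
                  inference_hours: Optional[int],
                  input_hours: Optional[int]) -> Set[str]:
    """Return which parts of one stored inference are past retention.

    None means 'keep indefinitely'; 0 means the part is always eligible.
    """
    age = now - created_at
    parts: Set[str] = set()
    if input_hours is not None and age >= timedelta(hours=input_hours):
        parts.add("input")
    if inference_hours is not None and age >= timedelta(hours=inference_hours):
        parts.add("inference")
    return parts


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
record_created = now - timedelta(hours=48)  # a record stored two days ago

# Under a keep-everything policy nothing is eligible...
print(expired_parts(record_created, now, None, None))
# ...but after switching to 72h inference / 24h input retention,
# the same existing record's input becomes eligible for cleanup.
print(expired_parts(record_created, now, 72, 24))
```

Because the check uses each record's age at the time it runs rather than the policy in force when the record was stored, the same older record is treated differently as soon as the policy changes.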

Getting a retention policy for a model

from chariot.inference_store import retention_policy

retention_policy.get_retention_policy(model_id=model_id)

Creating a manual retention policy for a model

from chariot.inference_store import models, retention_policy

# maximum_record_age (how long to keep the inference), maximum_blob_age (how long
# to keep the input data), and automated_interval are all in hours.
# A value of 0 for maximum_record_age or maximum_blob_age means "delete immediately
# on manual requests". A value of 0 for automated_interval means the policy is
# invoked manually and does not run on an automated interval.
new_retention_policy_request = models.NewRetentionPolicyRequest(
    maximum_record_age=72,  # 3 days
    maximum_blob_age=72,  # 3 days
    delete_action=models.DeleteAction.SOFT,
    automated_interval=0,  # invoked manually
)

new_retention_policy = retention_policy.create_retention_policy(
    model_id=model_id, request_body=new_retention_policy_request
)

Creating an automatic retention policy for a model

from chariot.inference_store import models, retention_policy

new_retention_policy_request = models.NewRetentionPolicyRequest(
    maximum_record_age=72,  # 3 days
    maximum_blob_age=72,  # 3 days
    delete_action=models.DeleteAction.SOFT,
    automated_interval=24,  # run daily
)

retention_policy.create_retention_policy(
    model_id=model_id, request_body=new_retention_policy_request
)
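Since `automated_interval` is given in hours and 0 means manual-only, the resulting schedule can be reasoned about as below. This is a minimal sketch: `scheduled_runs` is a hypothetical helper, and it assumes runs fire at fixed multiples of the interval after some start time, which this page does not specify for Chariot's actual scheduler.

```python
from datetime import datetime, timedelta, timezone
from typing import List


def scheduled_runs(start: datetime, automated_interval: int, count: int) -> List[datetime]:
    """Return the next `count` run times for an interval given in hours.

    An interval of 0 is treated as manual-only, so no runs are scheduled.
    """
    if automated_interval < 0:
        raise ValueError("automated_interval must be >= 0")
    if automated_interval == 0:
        return []
    step = timedelta(hours=automated_interval)
    return [start + step * i for i in range(1, count + 1)]


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
for run in scheduled_runs(start, 24, 3):  # automated_interval=24: daily
    print(run.isoformat())
```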

Executing a manual retention policy for a model

from chariot.inference_store import models, retention_task

new_retention_task_request = models.NewRetentionTaskRequest(
    dry_run=False, retention_policy_id=new_retention_policy.id
)

new_retention_task = retention_task.create_retention_task(
    model_id=model_id, request_body=new_retention_task_request
)
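The `dry_run` flag suggests a preview mode. The sketch below shows that pattern on an in-memory stand-in, assuming (not confirmed on this page) that `dry_run=True` reports what would be deleted without deleting it. The store and `run_retention_task` function are hypothetical and do not call the Chariot SDK.

```python
from typing import Dict, List


def run_retention_task(store: Dict[str, float], max_age_hours: float,
                       dry_run: bool) -> List[str]:
    """Return IDs of records at or past max_age_hours; delete them unless dry_run.

    `store` maps a record ID to its age in hours (a hypothetical stand-in).
    """
    eligible = sorted(rid for rid, age in store.items() if age >= max_age_hours)
    if not dry_run:
        for rid in eligible:
            del store[rid]
    return eligible


store = {"a": 100.0, "b": 10.0, "c": 72.0}
print(run_retention_task(store, 72, dry_run=True))   # preview only
print(run_retention_task(store, 72, dry_run=False))  # actually deletes
print(store)
```

Running once with `dry_run=True` and reviewing the result before a real run is a simple way to confirm a policy's scope before any data is removed.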

Getting details about a single retention task

from chariot.inference_store import retention_task

retention_task.get_retention_task(
    model_id=model_id, retention_task_id=new_retention_task.id
)
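A retention task may not finish immediately, so a common pattern is to poll its details until it reaches a terminal state. This is a generic polling sketch: `wait_for_task` is a hypothetical helper, and the state values passed to it in the usage below are placeholder strings. With the SDK, `done_states` could instead hold enum members such as `models.RetentionTaskState.COMPLETE` from the filtering example.

```python
import time
from typing import Any, Callable, Iterable


def wait_for_task(fetch_state: Callable[[], Any], done_states: Iterable[Any],
                  interval_s: float = 5.0, timeout_s: float = 300.0) -> Any:
    """Call fetch_state() until it returns a value in done_states.

    Raises TimeoutError if no terminal state is seen within timeout_s seconds.
    """
    done = set(done_states)
    deadline = time.monotonic() + timeout_s
    while True:
        state = fetch_state()
        if state in done:
            return state
        if time.monotonic() >= deadline:
            raise TimeoutError(f"task still in state {state!r} after {timeout_s}s")
        time.sleep(interval_s)


# Placeholder states standing in for successive get_retention_task() results
states = iter(["pending", "running", "complete"])
print(wait_for_task(lambda: next(states), {"complete", "failed"}, interval_s=0.0))
```

In practice, `fetch_state` would wrap `retention_task.get_retention_task(...)` and read the task's state from the returned object; the name of that field is not shown on this page, so check the returned object before relying on it.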

Filtering a collection of retention tasks for a model

from chariot.inference_store import models, retention_task

# Create an empty filter request
filter_request = models.NewGetRetentionTasksRequest()

# Or filter by specific criteria
filter_request = models.NewGetRetentionTasksRequest(
    state_filter=models.RetentionTaskState.COMPLETE,
    time_window_filter=models.TimeWindowFilter(
        start="2024-01-01T00:00:00Z",
        end="2024-01-31T23:59:59Z",
    ),
)

retention_task.filter_retention_tasks(
    model_id=model_id, request_body=filter_request
)
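The time window above covers one calendar month as UTC timestamp strings. A small helper can build these bounds for any month, including leap-year Februaries. `month_window` is a hypothetical convenience function, not part of the SDK. It reproduces the inclusive `23:59:59Z` end style used in the example; this page does not say whether the filter treats `end` as inclusive.

```python
import calendar
from typing import Tuple


def month_window(year: int, month: int) -> Tuple[str, str]:
    """Return (start, end) ISO-8601 UTC strings covering a whole calendar month."""
    last_day = calendar.monthrange(year, month)[1]  # number of days in the month
    start = f"{year:04d}-{month:02d}-01T00:00:00Z"
    end = f"{year:04d}-{month:02d}-{last_day:02d}T23:59:59Z"
    return start, end


start, end = month_window(2024, 1)
print(start, end)
```

The two strings can then be passed as `start=start, end=end` to `models.TimeWindowFilter`.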